Why Artificial Intelligence has become more Artificial than Intelligent

The last couple of years have seen a flurry of activity in the tech space around Artificial Intelligence, with OpenAI being the new kid on the block that set the cat (or ChatGPT) among the pigeons in 2022. Suddenly, every major tech company started to launch its own AI-based digital assistant, from Google's Gemini to Microsoft's Copilot, and even Apple's rather amusingly named Apple Intelligence. However, a quick look under the hood reveals that these assistants are still far from delivering the bed of roses we were originally promised.

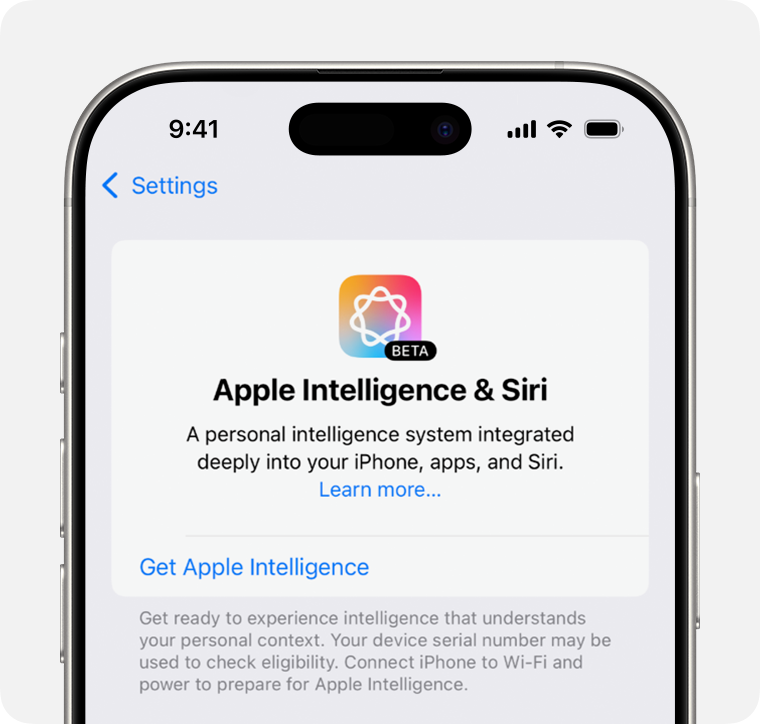

Last week, Apple made a surprise announcement that it is being forced to delay the launch of the Apple Intelligence-based Siri features that were expected to arrive in a software update by spring 2025. Below is the official statement from Apple's spokesperson Jacqueline Roy:

"Siri helps our users find what they need and get things done quickly, and in just the past six months, we’ve made Siri more conversational, introduced new features like type to Siri and product knowledge, and added an integration with ChatGPT. We’ve also been working on a more personalized Siri, giving it more awareness of your personal context, as well as the ability to take action for you within and across your apps. It’s going to take us longer than we thought to deliver on these features and we anticipate rolling them out in the coming year."

Let's analyze this statement to understand why the practical usability of Artificial Intelligence has failed to meet the hype generated by tech companies. In the first sentence, Apple says that it has made Siri more conversational, but Siri still struggles to understand user requests and responds with a "Sorry, I didn't get that." or "I'm not sure I understand." more often than not. The new Type to Siri feature doesn't fix this basic shortcoming either: users can't always type to a digital assistant, and having to do so defeats the purpose of a voice-based assistant in the first place (yes, it does increase accessibility for certain users). Even Product Knowledge is limited to settings and Apple-built apps on Apple devices, which is something a user can easily learn by reading the User Guide (like I do, but I know most of you don't!).

The big piece in this statement is the much-publicized integration with ChatGPT, OpenAI's chatbot built on its large language models, which Siri relies on for complex tasks or requests. However, users are asked to choose whether they want ChatGPT to respond to their query. That extra step makes the user experience less fluid and adds a few more seconds before a response arrives.

The more personalized Siri that Apple talks about in the second sentence is what was previewed at its Worldwide Developers Conference in June 2024 and used as one of the key features to advertise the iPhone 16 series of smartphones (including the new $599 iPhone 16e), a new line of MacBook laptops and Mac desktops, and new iPad tablets. Back then, Apple had promised that Siri would be able to understand users' personal context and seamlessly take actions for them within and across all apps, but we're still waiting to see that happen almost nine months later. And going by last week's announcement, it looks like we'll have to keep waiting for much longer.

The reasons behind Apple's admission of issues with Apple Intelligence can be debated, but it does highlight the fundamental problem with almost all Artificial Intelligence tools at the moment. When the tech companies behind these tools first launched them, we were promised that they would interact with apps on our behalf and do the administrative tasks for us, so that we could spend less time on our devices and more time doing the things that really matter to us (which, incidentally, isn't different from how all software companies position themselves). However, when these companies actually got down to building those tools, they soon realized that it wasn't going to be as easy as they had expected. Since each application has its own tech architecture and each operating system has its own underlying architecture, getting multiple digital assistants to deliver on that promise proved to be extremely challenging. At the same time, tech companies were also building large language models to find a practical application for Artificial Intelligence. OpenAI's ChatGPT emerged as a leader in this space, and both Apple and Microsoft decided to partner with OpenAI and integrate their digital assistants (Siri and Copilot, respectively) with ChatGPT. Microsoft even went a step further and invested over $10 billion in OpenAI to bring ChatGPT's functionalities into Microsoft Office 365 applications, the Bing search engine, and the Edge web browser.

I believe this is where our current problem originated. Large language models are great at proofreading, rewriting, and summarizing content, as well as suggesting writing styles and generating lists, tables, and even recipes for chocolate oatmeal brookies (yes, I tried asking Siri to do this. What's a brookie, you ask?). However, they don't understand personal context that well and can't interact with apps or take actions on behalf of users (yes, I know OpenAI is touting that its new Operator can do that for you now). And because every tech company started racing to integrate large language models into its digital assistant, the dream of an assistant that acts on our behalf became less of a priority for some and was sort of forgotten by others. That is, until Apple announced its personalized Siri last year and everyone started talking about how that would revolutionize the way we interact with our devices (we already know how that went).

On a positive note, I still think Apple is probably the only tech company that can pull together the resources to fulfill the promise of a personalized digital assistant that understands personal context. While it has admitted that the timelines were a bit overambitious, the goal remains achievable if it puts its best minds to it (as I'm sure it will). Apple's other projects, such as the (failed?) Apple Car, next-gen CarPlay, and Vision Pro, have surely taught it important lessons that will help as it develops the next-gen Siri. While I'm excited to see how this project unfolds, I will hold on to my iPhone 13 mini for now and wait for Artificial Intelligence to become more intelligent, so that it can feel a bit less artificial.
